Why Data Quality Makes or Breaks Your AI

Explore why data quality is paramount for successful AI implementation in mid-sized businesses. Learn about the core components of data quality and actionable strategies to overcome common challenges.

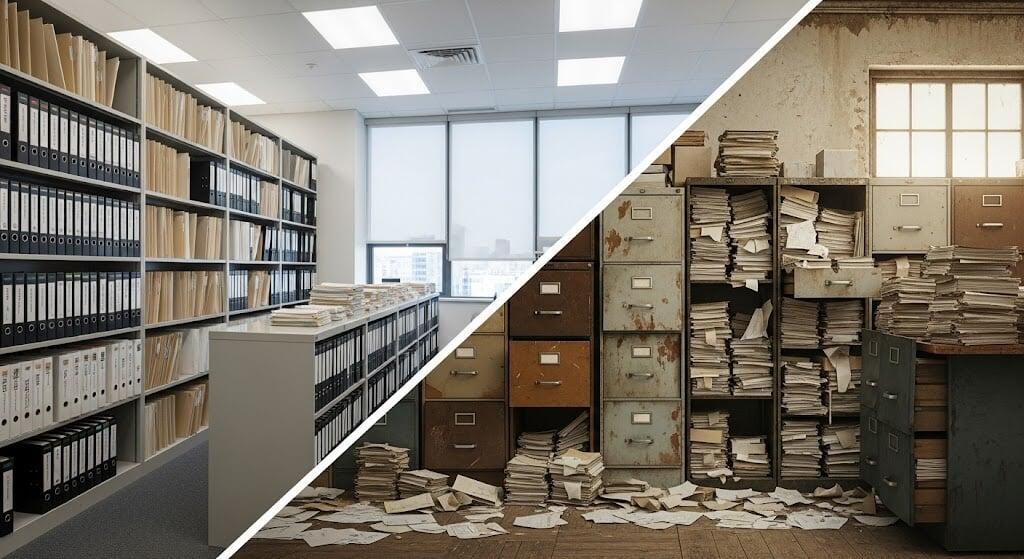

In a recent post, we unveiled the "AI Magic Trick," highlighting how data serves as the true, often unseen, star behind every successful Artificial Intelligence initiative. We explored the concept of data readiness as the meticulous preparation and precise execution that allow AI to perform its dazzling feats. But just as a magician's tools must be flawless and a chef's ingredients pure, the effectiveness of AI hinges on one critical, foundational element: data quality. Poor data quality is the unseen imperfection, the subtle flaw in the raw material, that can silently undermine even the most ambitious AI projects, turning potential breakthroughs into costly disappointments.

For many mid-sized organizations and their lean IT teams, the allure of AI is undeniable. The promise of enhanced productivity, smarter decision-making, and streamlined operations is a powerful motivator. Yet the path from pilot to production is frequently fraught with hidden obstacles. Often, the biggest obstacle isn't a complex algorithm or a sophisticated infrastructure challenge but the insidious, pervasive problem of poor data quality.

Consider the analogy of a master chef. They might possess the most advanced kitchen, the latest culinary techniques, and a brilliant recipe. But if their ingredients are spoiled, stale, or incomplete – if the "purity of ingredients" is compromised – the resulting dish will inevitably fall short, regardless of the chef's skill or tools. In the realm of AI, your data is that ingredient. If it's flawed, your AI's output will be, too.

The Silent Saboteur

How Poor Data Quality Undermines AI

The consequences of subpar data quality are far-reaching and often more damaging than initially perceived. They don't just lead to minor glitches; they can fundamentally compromise the integrity, effectiveness, and trustworthiness of your entire AI ecosystem. This silent sabotage (cue the music: the Beastie Boys' "Sabotage," from Ill Communication) can creep into every aspect of your AI initiative, turning promising ventures into frustrating dead ends.

The principle that "the output is only as good as the input data" is, of course, not new. This concept, famously encapsulated by the adage "Garbage In, Garbage Out" (GIGO), has been a cornerstone of computing and data management for decades. However, AI dramatically raises both the stakes and the difficulty of achieving and maintaining that quality. The demand for trustworthy data is higher than ever because the potential for both immense benefit and significant harm from AI is so much greater.

Inaccurate Insights: The Blindfold on Decision-Making: AI models learn from the data they're fed. If that data is inaccurate, incomplete, or inconsistent, the insights generated will be unreliable. Imagine an AI designed to predict market trends based on sales data riddled with duplicate entries and missing timestamps. Its forecasts would be skewed, leading to poor strategic decisions, misallocated resources, and missed opportunities. For CIOs and IT managers, this translates directly into a lack of trust from the business side, eroding confidence in AI's value proposition.

Bias Issues: The Unintended Echo Chamber: Data often reflects historical patterns and human biases. If your training data contains these inherent biases – whether algorithmic, historical, or due to prejudice – your AI models will not only learn them but can also amplify them. This can lead to unintended, negative outcomes in sensitive areas like hiring or customer service, creating significant ethical dilemmas, reputational damage, and even legal liabilities. Ensuring fairness in AI begins with scrutinizing the fairness of your data.

Degraded Performance & Bloated Costs: The Endless Treadmill: Low-quality data is a resource drain. IT teams find themselves in a constant cycle of "data wrangling" – cleaning, correcting, and transforming data manually before it can even be used. This isn't just inefficient; it's expensive. AI models trained on poor data also tend to degrade in performance over time, requiring continuous re-training and maintenance, further inflating operational expenses and delaying any tangible return on AI investment.

Compliance Risks: The Regulatory Minefield: In an era of escalating data privacy regulations (like GDPR, CCPA, HIPAA, and emerging AI-specific acts), using non-compliant or unsecured data can lead to severe penalties. Feeding sensitive, unmasked, or improperly handled data into an AI system without proper governance can result in data breaches, privacy violations, and hefty fines that can cripple a mid-sized organization. The reputational damage alone can be irreparable.
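One common safeguard against feeding unmasked data into an AI system is to pseudonymize direct identifiers before they leave the source system. The minimal sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library), so the same email always maps to the same token and records can still be joined, while the raw value is never exposed downstream. The field names and the hard-coded key are assumptions for illustration only; in practice the key belongs in a secrets manager. Pseudonymized data can still count as personal data under GDPR, so this reduces exposure rather than removing compliance obligations.

```python
import hashlib
import hmac

# Assumption: illustration only. Load the real key from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

# Hypothetical field names that hold direct identifiers.
SENSITIVE_FIELDS = {"email", "phone"}

def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace a sensitive value with a keyed, non-reversible token (HMAC-SHA256, hex)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()

def mask_record(record: dict) -> dict:
    """Return a copy of the record with sensitive, non-empty fields pseudonymized."""
    return {
        field: pseudonymize(value) if field in SENSITIVE_FIELDS and value else value
        for field, value in record.items()
    }

customer = {"id": 1042, "email": "Jane.Doe@example.com", "phone": "555-0100", "segment": "SMB"}
masked = mask_record(customer)
print(masked)
```

Because the token is deterministic for a given key, rotating the key breaks joins with previously masked data; that trade-off is worth deciding up front.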

A recent Salesforce study highlighted that 92% of IT and analytics leaders believe the demand for trustworthy data has never been higher, with 86% agreeing that "AI’s outputs are only as good as its data inputs." Yet, data quality issues persist, often due to a lack of resources or clear policies.

The Core Components of Data Quality

A Practical Guide for Lean Teams

Achieving high data quality isn't about perfection overnight; it's about establishing practical, sustainable processes. For mid-sized organizations with lean IT teams, the focus should be on incremental improvements and leveraging smart strategies. Here are the core components:

1. Cleaning: Sweeping Away the Digital Dust

Data cleaning involves identifying and correcting errors, inconsistencies, and inaccuracies in your datasets. This includes fixing typos and misspellings and standardizing formats (e.g., ensuring all dates use the YYYY-MM-DD format).
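As a minimal sketch of the format standardization just described, the snippet below converts dates from a few known layouts into YYYY-MM-DD and tidies whitespace in free-text fields. The list of input formats is an assumption; in practice you would build it from what profiling actually finds in your sources. Values that match no known format are returned as `None` for review rather than guessed.

```python
from datetime import datetime

# Assumption: the date layouts seen in the source systems. Only one of
# month/day and day/month may be listed per separator, because a value like
# 03/04/2024 cannot be disambiguated automatically.
KNOWN_DATE_FORMATS = ["%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y", "%b %d, %Y"]

def to_iso_date(raw):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    text = raw.strip()
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue  # try the next known layout
    return None  # leave unparseable values for manual review instead of guessing

def clean_text(raw):
    """Trim the ends and collapse repeated internal whitespace."""
    return " ".join(raw.split())

rows = ["2024-03-07", "03/07/2024", "07.03.2024", "Mar 07, 2024", "sometime in March"]
print([to_iso_date(r) for r in rows])
print(clean_text("  Acme   Corp  "))
```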

Actionable Advice: Start small. Identify the most critical datasets for your initial AI use cases. Utilize automated data profiling tools (many are available as open-source or affordable SaaS solutions) to quickly identify common errors. Prioritize fixing errors that have the highest impact on your AI's performance. For instance, if your AI is analyzing customer demographics, ensure age and location fields are consistently formatted.

2. De-duplication: Eliminating the Echoes

Duplicate records are a common scourge, leading to inflated numbers, skewed analyses, and wasted storage. De-duplication involves identifying and merging or removing redundant entries.

Actionable Advice: Implement clear rules for identifying duplicates (e.g., matching on multiple fields like name, email, and address). Consider using master data management (MDM) principles, even at a simplified scale, to create a "golden record" for key entities. For mid-sized firms, this might involve a phased approach, starting with customer or product data.
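The rules above can be sketched as follows: build a match key from several normalized fields, group records that share a key, and merge each group into a simple "golden record." The field names and the merge rule (newer non-empty values win) are assumptions for illustration, not a standard MDM algorithm. This version only catches exact matches after normalization; fuzzy matching of near-duplicates such as "Jon" vs. "John" would need additional logic.

```python
from collections import defaultdict

MATCH_FIELDS = ("name", "email", "address")  # hypothetical matching fields

def match_key(record):
    """Lowercase each matching field and collapse whitespace so trivial variants compare equal."""
    return tuple(" ".join(str(record.get(f, "")).lower().split()) for f in MATCH_FIELDS)

def build_golden_records(records):
    """Group duplicates by match key and merge each group into one record.

    Assumed merge rule: apply records from oldest to newest ("updated" is an
    ISO date string), letting later non-empty values overwrite earlier ones.
    """
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)

    golden = []
    for dupes in groups.values():
        merged = {}
        for record in sorted(dupes, key=lambda r: r["updated"]):
            merged.update({k: v for k, v in record.items() if v not in (None, "")})
        golden.append(merged)
    return golden

customers = [
    {"name": "jane  doe", "email": "JANE@example.com", "address": "1 Main St", "phone": "", "updated": "2023-01-10"},
    {"name": "Jane Doe", "email": "jane@example.com", "address": "1 Main St", "phone": "555-0100", "updated": "2024-05-02"},
    {"name": "John Roe", "email": "john@example.com", "address": "9 Oak Ave", "phone": "555-0199", "updated": "2024-02-11"},
]
golden = build_golden_records(customers)
print(len(golden), golden)
```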

3. Accuracy: The Truth in Every Byte

Accuracy refers to the degree to which data correctly reflects the real-world entity or event it represents. Inaccurate data is fundamentally misleading.

Actionable Advice: Establish data validation rules at the point of entry. Use dropdowns, constrained input fields, and automated checks to prevent incorrect data from entering your systems. Regularly audit key datasets against reliable sources to identify and correct inaccuracies. Encourage data owners (business users) to take responsibility for the accuracy of their data.
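To illustrate the auditing step, here is a minimal sketch that compares a field in operational data against a trusted reference and reports an accuracy rate plus the rows that disagree. The reference table and field names are hypothetical stand-ins for whatever authoritative source you audit against. Rows the reference cannot vouch for are skipped rather than counted as correct.

```python
# Hypothetical trusted reference: postal code -> city, e.g. exported from an authoritative dataset.
REFERENCE_CITY_BY_POSTCODE = {
    "10001": "New York",
    "60601": "Chicago",
    "94105": "San Francisco",
}

def audit_against_reference(records, reference):
    """Return (accuracy, mismatches) for rows the reference can check."""
    mismatches = []
    checked = 0
    for record in records:
        expected = reference.get(record["postcode"])
        if expected is None:
            continue  # no reference value, so accuracy cannot be judged for this row
        checked += 1
        # casefold/strip so formatting noise is not counted as an accuracy error
        if record["city"].strip().casefold() != expected.casefold():
            mismatches.append({**record, "expected_city": expected})
    accuracy = 1 - len(mismatches) / checked if checked else None
    return accuracy, mismatches

crm_rows = [
    {"id": 1, "postcode": "10001", "city": "New York"},
    {"id": 2, "postcode": "60601", "city": "San Francisco"},  # wrong city
    {"id": 3, "postcode": "94105", "city": "san francisco "},  # right city, sloppy formatting
    {"id": 4, "postcode": "99999", "city": "Springfield"},     # not covered by the reference
]
accuracy, issues = audit_against_reference(crm_rows, REFERENCE_CITY_BY_POSTCODE)
print(f"accuracy: {accuracy:.0%}")
for issue in issues:
    print(issue)
```

Routing the mismatch list back to the owning business team fits the advice above about making data owners responsible for accuracy.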

4. Completeness: Filling in the Blanks

Incomplete data, characterized by missing values in critical fields, can cripple AI models that rely on comprehensive information.
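A minimal sketch of the completeness practices in this section: measure how often each field is populated, route records missing mandatory fields to manual enrichment, and impute a numeric field with its median while flagging which values were filled in. The mandatory fields, field names, and choice of median imputation are assumptions for illustration. A categorical field like industry is deliberately flagged rather than imputed, since guessing it would inject inaccurate data.

```python
from statistics import median

# Hypothetical: fields the model cannot work without.
MANDATORY = ("customer_id", "industry")

def is_missing(value):
    """Treat None and blank strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())

def completeness_report(records, fields):
    """Share of records holding a usable value for each field."""
    if not records:
        return {}
    return {f: sum(not is_missing(r.get(f)) for r in records) / len(records) for f in fields}

def impute_median(records, field):
    """Fill missing numeric values with the median of observed ones and flag each filled row."""
    observed = [r[field] for r in records if not is_missing(r.get(field))]
    if not observed:
        return records  # nothing to base an estimate on; leave for manual enrichment
    fill = median(observed)
    for r in records:
        if is_missing(r.get(field)):
            r[field] = fill
            r[f"{field}_imputed"] = True  # keep imputed values distinguishable from real ones
    return records

customers = [
    {"customer_id": "C1", "industry": "Retail", "employees": 40},
    {"customer_id": "C2", "industry": "", "employees": None},
    {"customer_id": "C3", "industry": "Logistics", "employees": 120},
    {"customer_id": "C4", "industry": None, "employees": 75},
]

report = completeness_report(customers, MANDATORY + ("employees",))
needs_enrichment = [r["customer_id"] for r in customers if any(is_missing(r.get(f)) for f in MANDATORY)]
impute_median(customers, "employees")
print(report)
print("route to manual enrichment:", needs_enrichment)
```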

Actionable Advice: Define which data fields are mandatory for specific business processes and AI applications. Implement processes to flag and address missing data, either through automated imputation (filling in missing values based on other data) or manual enrichment where necessary. For example, if a customer's industry is crucial for a predictive model, ensure that field is always populated.

5. Consistency: Speaking the Same Language

Consistency ensures that data is uniform across different systems and over time. Inconsistent data might use different units of measurement, varying codes for the same entity, or conflicting definitions.
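One way to put a data dictionary to work is as a mapping that translates each system's local codes and units into a shared vocabulary. The minimal sketch below assumes two hypothetical source systems ("crm" and "billing") with different segment codes and weight units; unmapped codes raise an error so gaps in the dictionary surface instead of slipping through silently.

```python
# Hypothetical data dictionary: each source system's local codes mapped to one canonical code.
SEGMENT_CODES = {
    "crm": {"SMB": "small_business", "ENT": "enterprise"},
    "billing": {"S": "small_business", "E": "enterprise", "L": "enterprise"},
}

# Conversion factors to the canonical unit (kilograms) for a weight field.
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}

def harmonize(record, source):
    """Translate a source-specific record into the shared codes and units."""
    try:
        segment = SEGMENT_CODES[source][record["segment"]]
    except KeyError:
        raise ValueError(
            f"Unmapped segment {record['segment']!r} from {source!r}; update the data dictionary"
        ) from None
    weight_kg = record["weight"] * TO_KG[record["weight_unit"]]
    return {"id": record["id"], "segment": segment, "weight_kg": round(weight_kg, 3)}

crm_row = {"id": "A-17", "segment": "SMB", "weight": 12, "weight_unit": "lb"}
billing_row = {"id": "A-17", "segment": "S", "weight": 5443, "weight_unit": "g"}
print(harmonize(crm_row, "crm"))
print(harmonize(billing_row, "billing"))
```

After harmonization, the two systems' views of the same entity agree, which is the point of the standardization the advice below describes.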

Actionable Advice: Develop and enforce data dictionaries and glossaries across your organization. Standardize naming conventions, codes (e.g., for product categories or customer segments), and data types. This ensures that when different systems or departments refer to the "same" data point, they are truly referring to identical information.

6. Timeliness: The Freshness Factor

Timeliness means data is available when needed and is sufficiently current for its intended use. Stale data can lead to outdated insights and poor decisions, especially in dynamic environments.

Actionable Advice: Establish clear data refresh rates based on the needs of your AI applications. Implement automated data pipelines to ensure data is ingested and processed in a timely manner. For real-time AI applications (e.g., fraud detection), prioritize streaming data processing over batch processing.
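Refresh targets are easiest to enforce when they are written down and checked automatically. The minimal sketch below assumes hypothetical per-dataset maximum ages and a record of when each dataset was last loaded; a scheduler could run such a check after each pipeline run and alert on anything stale. The dataset names and thresholds are illustrative only.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical freshness targets, derived from what each AI use case needs.
MAX_AGE = {
    "transactions": timedelta(minutes=5),     # e.g. feeds a fraud-detection model
    "customer_profiles": timedelta(days=1),
    "product_catalog": timedelta(days=7),
}

def stale_datasets(last_loaded, now=None):
    """Return the names of datasets whose latest load is older than their target age."""
    now = now or datetime.now(timezone.utc)
    return sorted(
        name for name, loaded_at in last_loaded.items()
        if now - loaded_at > MAX_AGE[name]
    )

now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
last_loaded = {
    "transactions": now - timedelta(minutes=30),
    "customer_profiles": now - timedelta(hours=6),
    "product_catalog": now - timedelta(days=10),
}
print(stale_datasets(last_loaded, now))
```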

7. Validity: Conforming to the Rules

Validity ensures that data conforms to predefined rules or constraints. For example, a "date of birth" field should only contain valid dates, and a "customer ID" should adhere to a specific format.
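The three kinds of checks named in this section (regular expressions, lookup tables, and logical checks) can be combined into a single record validator. The minimal sketch below assumes a hypothetical `CUST-` plus six digits customer ID format, an illustrative subset of country codes, and a plausible birth-date range starting in 1900; it returns a list of violations instead of raising an error, so one bad field does not hide the others.

```python
import re
from datetime import date

CUSTOMER_ID = re.compile(r"CUST-\d{6}")        # hypothetical ID format
DOB_FORMAT = re.compile(r"\d{4}-\d{2}-\d{2}")   # YYYY-MM-DD shape
VALID_COUNTRIES = {"US", "CA", "GB", "DE"}      # lookup table (illustrative subset)

def validate(record, today=None):
    """Return a list of rule violations for one record; an empty list means valid."""
    today = today or date.today()
    errors = []
    if not CUSTOMER_ID.fullmatch(record.get("customer_id") or ""):
        errors.append("customer_id does not match CUST-######")
    dob_text = record.get("date_of_birth") or ""
    if not DOB_FORMAT.fullmatch(dob_text):
        errors.append("date_of_birth is not in YYYY-MM-DD format")
    else:
        try:
            dob = date.fromisoformat(dob_text)  # rejects impossible dates such as Feb 30
        except ValueError:
            errors.append("date_of_birth is not a real calendar date")
        else:
            if not date(1900, 1, 1) <= dob <= today:  # logical range check
                errors.append("date_of_birth outside plausible range")
    if record.get("country") not in VALID_COUNTRIES:
        errors.append("country not in lookup table")
    return errors

today = date(2024, 6, 1)
good = {"customer_id": "CUST-004217", "date_of_birth": "1985-02-28", "country": "US"}
bad = {"customer_id": "C4217", "date_of_birth": "1985-02-30", "country": "XX"}
print(validate(good, today))
print(validate(bad, today))
```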

Actionable Advice: Implement strong data validation checks at every stage of the data lifecycle, from input to integration. Use regular expressions, lookup tables, and logical checks to ensure data adheres to its defined structure and acceptable values.

Overcoming Barriers

Data Quality in a Mid-Sized World

Mid-sized organizations often face unique challenges in their quest for data quality:

Lack of Dedicated Resources or Specialized Skills: Lean IT teams are stretched thin, often juggling multiple priorities without dedicated data quality specialists.

Fragmented Data Policies or Inconsistent Practices: Without a centralized data governance strategy, different departments might collect and manage data in their own ways, leading to inconsistencies.

Siloed Data Sources: Data trapped in disparate systems makes it difficult to get a holistic, consistent view, complicating quality efforts.

The key to overcoming these barriers lies in a pragmatic, phased approach. Start by identifying the highest-impact data for your most critical AI initiatives. Leverage readily available tools and automation where possible. Foster a data-aware culture across the organization, emphasizing that data quality is a shared responsibility, not just an IT problem.

Data Quality: The Foundation of AI Success

Read more about the importance of Data Readiness in AI:

The AI Magic Trick: Why Your Data is the Real Star of the Show